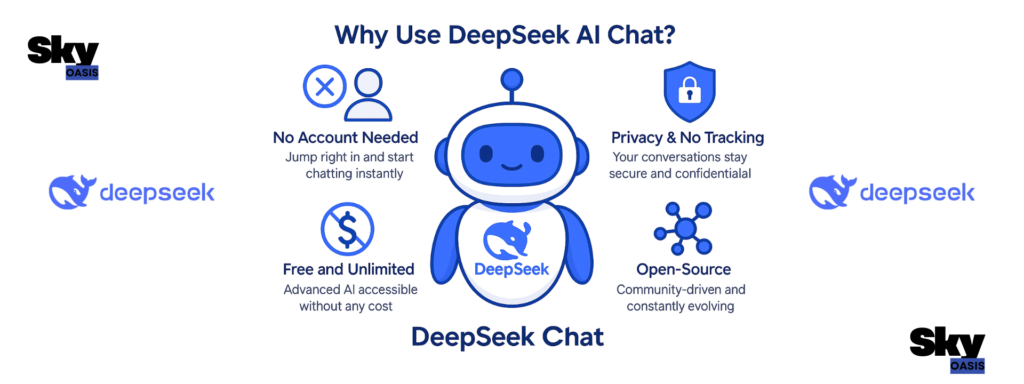

DeepSeek AI has emerged as a transformative force in artificial intelligence, challenging proprietary giants with its open-source large language models (LLMs) that deliver near-frontier performance at a fraction of the cost. Founded in 2023 as a research lab under High-Flyer Capital Management in Hangzhou, China, DeepSeek prioritizes foundational AI exploration over short-term profits, rapidly scaling from DeepSeek LLM to flagships like DeepSeek-V3.2 and R1. This Chinese AI powerhouse, led by hedge fund veteran Liang Wenfeng, has garnered billions in valuation through efficient Mixture-of-Experts (MoE) architectures, achieving state-of-the-art benchmarks in coding, math, and reasoning tasks.

What sets DeepSeek apart in the crowded LLM arena? Its commitment to open-source accessibility has democratized advanced AI for developers worldwide: models like DeepSeek-V3 hold 671 billion parameters yet activate only 37 billion per token. Demand has surged, and even after API price increases reported at up to 300%, DeepSeek still undercuts OpenAI’s GPT-4o, signaling a shift toward cost-effective, high-performance intelligence. This article delves into DeepSeek’s architecture, key models, benchmarks, applications, and future roadmap, offering expert insights for AI enthusiasts, developers, and business leaders seeking to leverage DeepSeek models in 2025.

DeepSeek Company Background: From Finance to AI Frontier

Origins and Leadership

DeepSeek traces its roots to 2023, when Liang Wenfeng, a Guangdong-born hedge fund entrepreneur, pivoted from High-Flyer Capital to establish the firm with a mission to probe intelligence’s core principles. Unlike profit-driven startups, DeepSeek operates as a research entity, fostering open-source collaboration to advance the AI ecosystem. This philosophy propelled rapid innovation, from initial LLMs to multimodal capabilities, attracting investments from Alibaba, China Investment Corp, and the National Social Security Fund amid infrastructure-straining demand.

Funding and Growth Trajectory

By early 2025, DeepSeek pursued its first major external funding round, valuing efficiency over hype; its training costs remain a fraction of those of competitors like OpenAI. Explosive adoption led to outages and API price increases reported at up to 300%. The V3 input rate, for example, rose from $0.14 to $0.27 per million tokens (roughly a 93% increase), yet rates stay competitive at $1.1 per million output tokens versus GPT-4o’s premiums. GitHub repositories like DeepSeek-Coder-V2 have amassed stars rivaling Llama, underscoring community trust.
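The price figures in this paragraph can be sanity-checked with a few lines of arithmetic. The sketch below uses only the two input-token prices quoted above; the helper names are hypothetical. It suggests that the quoted input example is closer to a doubling, so the 300% figure presumably refers to a different rate or is approximate.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the relative increase from old to new, in percent."""
    if old <= 0:
        raise ValueError("old price must be positive")
    return (new - old) / old * 100.0


def price_after_increase(old: float, percent: float) -> float:
    """Return the price that a given percentage increase would produce."""
    return old * (1.0 + percent / 100.0)


# Input-token prices quoted in the text, in USD per million tokens.
old_input, new_input = 0.14, 0.27

input_increase = percent_increase(old_input, new_input)
implied_by_300 = price_after_increase(old_input, 300.0)

print(f"quoted input increase: {input_increase:.1f}%")
print(f"$0.14 after a true +300%: ${implied_by_300:.2f}")
```

A true 300% increase on $0.14 would land at $0.56, not $0.27, which is why the two numbers should not be read as describing the same rate.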

Key milestones include:

- May 2023: DeepSeek launch with inaugural LLM.

- 2024: DeepSeek-V2 and Coder-V2 break closed-source barriers in code intelligence.

- 2025: V3.2 release enhances agentic AI; API platform scales globally.

Core DeepSeek AI Models: Architecture and Innovations

DeepSeek-V3 Family: Efficiency Meets Power

DeepSeek-V3, the cornerstone 671B-parameter MoE model, powers chat, coder, and math variants with 256 routed experts per layer (up from V2’s 160) and one always-active shared expert. Dynamic gating activates just 37B parameters per token, about 5.5% of the total, and load balancing relies mainly on an auxiliary-loss-free bias adjustment rather than a heavy balancing loss. The result is a reported 80% cut in compute while rivaling GPT-4.5 in math and coding. Recent updates extend this base: V3-0324 incorporates RL-inspired post-training for superior tool use, V3.1-Terminus boosts agent stability, and V3.2-Exp introduces Sparse Attention for long context (128K tokens standard, up to 1M enterprise).
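The routing idea described above can be illustrated with a toy example. This is a minimal sketch of generic top-k MoE routing with one shared expert, not DeepSeek's implementation: the value of `TOP_K`, the softmax over selected experts, and the scalar "experts" are all assumptions made for illustration.

```python
import math
import random

NUM_ROUTED = 256  # routed experts per layer, as stated in the text
TOP_K = 8         # assumption: experts selected per token (not stated in the text)


def route(scores: list[float], top_k: int) -> dict[int, float]:
    """Select the top_k experts by score and normalise their gate weights."""
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    peak = max(scores[i] for i in top)
    # Softmax over the selected experts only (an illustrative choice).
    exps = {i: math.exp(scores[i] - peak) for i in top}
    total = sum(exps.values())
    return {i: e / total for i, e in exps.items()}


def make_expert(weight: float):
    """Toy expert: scales its input by a fixed weight."""
    return lambda x: weight * x


def moe_output(x: float, scores: list[float], experts, shared, top_k: int) -> float:
    """Shared expert always runs; only top_k routed experts are evaluated."""
    out = shared(x)
    for idx, gate in route(scores, top_k).items():
        out += gate * experts[idx](x)
    return out


random.seed(0)
experts = [make_expert(random.uniform(-1, 1)) for _ in range(NUM_ROUTED)]
shared = make_expert(0.5)
scores = [random.gauss(0, 1) for _ in range(NUM_ROUTED)]  # stand-in router logits

gates = route(scores, TOP_K)
y = moe_output(2.0, scores, experts, shared, TOP_K)

# Fraction of parameters active per token, from the figures in the text.
active_fraction = 37 / 671
print(f"experts used: {len(gates)} of {NUM_ROUTED}, active params: {active_fraction:.1%}")
```

Only the selected experts are ever called, which is where the compute saving comes from: the other experts' parameters exist but do no work for that token.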

DeepSeek-R1: Reasoning Revolution

Built on the V3 base, R1 refines reasoning chiefly through large-scale reinforcement learning, inheriting V3’s MoE sparsity and FP8-trained backbone. It excels in math (87.5% on AIME 2025) and coding, and its sparsity keeps long contexts efficient. Multimodal inputs are covered by the separate VL models.

Specialized siblings:

- DeepSeek-Coder-V2: 91% HumanEval, outperforming GPT-4o in code (73.5% MBPP).

- DeepSeek-Math: Tailored for proofs and theorems.

- DeepSeek-VL: Vision-language for image analysis.

DeepSeek AI Benchmarks: Outpacing Industry Leaders

DeepSeek models dominate open-source leaderboards, often surpassing closed rivals in cost-sensitive metrics. V3-0324 beats GPT-4.5 in math/coding; R1 edges Gemini/GPT/Llama in classification but trails Claude slightly.

DeepSeek’s edge is lower latency and lower pricing, which holds even after post-promotion price increases of roughly 92-307%. In authorship and citation tasks, R1 outperforms all but Claude.

Expert insight: For production, prioritize V3.2-Exp’s Sparse Attention, which roughly halves inference time on long documents.
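To make the sparse-attention idea concrete, here is a minimal sketch of generic top-k attention for a single query, in plain Python. It is not DeepSeek's mechanism: the function name is hypothetical, and this toy version still scores every key in order to pick the top k, whereas a practical design would use a cheaper selector.

```python
import math


def topk_attention(query, keys, values, k):
    """Attend only to the k keys with the highest dot-product score.

    Returns the weighted sum of the selected values and the kept indices.
    """
    scores = [sum(q * c for q, c in zip(query, key)) for key in keys]
    keep = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    peak = max(scores[i] for i in keep)
    weights = [math.exp(scores[i] - peak) for i in keep]
    total = sum(weights)
    out = [0.0] * len(values[0])
    for w, i in zip(weights, keep):
        for d, v in enumerate(values[i]):
            out[d] += (w / total) * v
    return out, keep


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [-1.0, 0.0]]
values = [[1.0], [2.0], [3.0], [4.0]]

sparse_out, kept = topk_attention(query, keys, values, k=2)
dense_out, _ = topk_attention(query, keys, values, k=len(keys))

print("kept key indices:", kept)
print("sparse:", sparse_out, "dense:", dense_out)
```

Setting `k` to the full sequence length recovers ordinary dense attention, so the saving comes entirely from aggregating over `k` values per query instead of all of them.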

DeepSeek AI Applications and Use Cases: Real-World Impact

DeepSeek’s versatility spans industries, from coding assistants to enterprise agents. Pretrained on vast multilingual data, it excels in research, writing, and analysis.

Top use cases:

- Coding/Debugging: Generate snippets, explain concepts—V3.1 scores 54.5% SWE-bench Multilingual.

- Data Analysis: Trend spotting, visualization proposals; automate reports.

- Project Management: To-do lists, meeting summaries.

- Learning Tools: Tutorials, translations; math proofs via DeepSeek-Math.

- Business Apps: Sentiment analysis, policy breakdowns, cybersecurity anomaly detection.

DeepSeek API: Pricing, Integration, and Scalability

Post-2025 promo, DeepSeek API remains economical:

| Model | Input, cache miss (USD per 1M tokens) | Output (USD per 1M tokens) | Notes |
|---|---|---|---|
| V3-chat | $0.27 | $1.1 | After increases of up to 300%; still below GPT-4o |
| R1 | Comparable | N/A | Agent-optimized |

Features: 128K context, hybrid modes, global availability via open platform. Status: High demand causes occasional throttling.
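A minimal integration sketch follows, using only the Python standard library. The endpoint URL, the model identifier, and the OpenAI-style request shape are assumptions that should be checked against the official API documentation; the backoff helper is a hypothetical way of handling the throttling mentioned above. The request is built but not sent.

```python
import json
import urllib.request

# Assumptions: endpoint and model name should be verified in the official docs.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-chat"


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def backoff_delays(retries: int, base: float = 1.0) -> list[float]:
    """Exponential backoff schedule, in seconds, for throttled responses."""
    return [base * 2 ** attempt for attempt in range(retries)]


req = build_request("YOUR_API_KEY", "Summarize MoE routing in one sentence.")
print(req.get_method(), req.full_url)
print("retry after (s):", backoff_delays(3))
```

To actually send the request, pass `req` to `urllib.request.urlopen` and, on a throttling response, sleep for the next delay in the schedule before retrying.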

Pro tip: Cache hits slash costs 50%; batch queries for throughput.
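The tip above can be turned into a rough cost estimate. The sketch below takes the $0.27 and $1.1 rates from the pricing table, treats $0.27 as the uncached input price, and applies the 50% cache discount stated in the tip; all three are approximations, and the function name is hypothetical.

```python
INPUT_PER_M = 0.27     # USD per million input tokens (table above, assumed uncached)
OUTPUT_PER_M = 1.10    # USD per million output tokens (table above)
CACHE_DISCOUNT = 0.50  # discount on cached input tokens, per the tip (approximate)


def estimate_cost(input_tokens: int, output_tokens: int,
                  cache_hit_ratio: float = 0.0) -> float:
    """Estimate the USD cost of a workload given a cache-hit ratio in [0, 1]."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    cached = input_tokens * cache_hit_ratio
    uncached = input_tokens - cached
    billable_input = uncached + cached * (1.0 - CACHE_DISCOUNT)
    input_cost = billable_input * INPUT_PER_M / 1_000_000
    output_cost = output_tokens * OUTPUT_PER_M / 1_000_000
    return input_cost + output_cost


no_cache = estimate_cost(1_000_000, 1_000_000)
full_cache = estimate_cost(1_000_000, 1_000_000, cache_hit_ratio=1.0)
print(f"no cache: ${no_cache:.3f}, fully cached input: ${full_cache:.3f}")
```

Because output tokens dominate the bill at these rates, caching helps most for workloads with long, repeated prompts and short completions.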

Challenges and Controversies: Navigating Risks

DeepSeek faces scrutiny over data privacy, censorship in Chinese models, and security, and users report biases on sensitive topics. Open weights partly mitigate these risks because the models can be inspected and self-hosted, while FP8 optimizations reduce the memory and compute needed for deployment.

DeepSeek Roadmap: Multimodal and Beyond

2025-2026 plans: Audio multimodal, edge models, open RL environments for safety. V3.2 rollout emphasizes agents (31.3% Terminal-bench). Expect V4 with 1M+ tokens standard.

FAQ

What is DeepSeek AI and its main models?

DeepSeek AI is a Chinese open-source LLM developer offering V3.2 (flagship MoE), R1 (reasoning), and Coder-V2 for coding/math.

How does DeepSeek AI compare to GPT-4o or Claude?

DeepSeek leads in cost-efficiency and open-source coding/math (e.g., 66% SWE-bench vs. GPT-4.1’s 55%), trailing Claude in some accuracy but excelling in speed.

Is DeepSeek API free or paid?

Free tier via web/app; API paid post-promo ($0.27-$1.1/M tokens), cheaper than rivals.

Can I fine-tune DeepSeek AI models?

Yes, fully open-source on Hugging Face—use SFT/RLHF for custom tasks.

What are DeepSeek’s top benchmarks?

66% SWE-bench, 87.5% AIME, 91% HumanEval—tops open-source charts.

Conclusion: Why DeepSeek AI Defines Accessible AI Excellence

DeepSeek AI redefines large language model innovation through open-source efficiency, benchmark dominance, and scalable applications, positioning it as the go-to for 2025’s AI builders. From V3.2’s agent prowess to R1’s reasoning depth, its MoE architecture delivers enterprise-grade power without proprietary lock-in. Developers and firms: Integrate today via API or GitHub for transformative gains. As Liang Wenfeng envisions, DeepSeek propels the ecosystem forward—explore, deploy, innovate.